Human-Centered Artificial Intelligence

Ben Shneiderman, April 19, 2022, ben@cs.umd.edu

Book

Ben Shneiderman’s book, Human-Centered AI, was published by Oxford University Press in February 2022. It greatly expands on the contents of the earlier papers described below.

A review in UK Popular Science (January 13, 2022) by Brian Clegg, a noted UK science writer, makes some sharp points but happily ends with “it is one of the most important AI books of the last few years.”

The book received multiple supportive reviews, but this strong review in Forbes made a big difference: “an excellent introduction to the concepts of HCAI… Human-centered AI is an important concept. This book is a heavy introduction, and many parts of it will be useful to different audiences. Students, in academia and business, can read it all, but it is still valuable to management who need to both understand how to better direct AI development and to require appropriate AI to solve market and social challenges.” After the review, the book jumped to #6 in Amazon’s Top AI books and #8 in Top HCI books, though it has since fallen back a bit.

Other reviews and extracts were published on websites such as Engadget, The Next Web, and Computers and Society Blog.

Table of Contents and Introduction.

Introduction

Artificial Intelligence (AI) dreams and nightmares, represented in popular culture through books, games, and movies, evoke images of startling advances as well as terrifying possibilities. In both cases, people are no longer in charge; the machines rule. However, there is a third possibility: an alternative future filled with computing devices that dramatically amplify human abilities, empowering people and ensuring human control. This compelling prospect, called Human-Centered AI (HCAI), enables people to see, think, create, and act in extraordinary ways by combining potent user experiences with embedded AI support services that users want.

The HCAI framework bridges the gap between ethics and practice with specific recommendations for making successful technologies that augment, amplify, empower, and enhance humans rather than replace them. This shift in thinking could lead to a safer, more understandable, and more manageable future. An HCAI approach will reduce the prospects for out-of-control technologies, calm fears of robot-driven unemployment, and diminish the threats to privacy and security. A human-centered future will also support human values, respect human dignity, and raise appreciation for the human capacities that bring creative discoveries and inventions.

Audience and goals: This fresh vision is meant as a guide for researchers, educators, designers, programmers, managers, and policy makers in shifting toward language, imagery, and ideas that advance a human-centered approach. Putting people at the center will lead to the creation of powerful tools, convenient appliances, and well-designed products and traditional services that empower people, build their self-efficacy, clarify their responsibility, and support their creativity.

A bright future awaits AI researchers, developers, business leaders, policy makers, and others who expand their goals to include Human-Centered AI (HCAI) ways of thinking. This enlarged vision can shape the future of technology so as to better serve human needs. In addition, educators, designers, software engineers, programmers, product managers, evaluators, and government agency staffers can all contribute to novel technologies that make life better for people. Humans have always been tool builders, and now they are supertool builders, whose innovations can improve our health, family life, education, business, environment, and much more.

The high expectations and impressive results from Artificial Intelligence (AI) have triggered intense worldwide research and development. The promise of startling advances from machine learning and other algorithms energizes discussions, while eliciting huge investments in medical, manufacturing, and military innovations.

The AI community’s impact is likely to grow even larger by embracing a human-centered future, filled with supertools that amplify human abilities, empowering people in remarkable ways. This compelling prospect of Human-Centered AI (HCAI) builds on AI methods, enabling people to see, think, create, and act with extraordinary clarity. HCAI technologies bring superhuman capabilities, augmenting human creativity while raising human performance and self-efficacy.

Join the Human-Centered AI Google Group: https://groups.google.com/g/

Follow @HumanCenteredAI on Twitter.

Videos

- Imperial College London, February 17, 2021, Human-Centered AI: Reliable, Safe & Trustworthy (60min)

- ACM Intelligent User Interfaces Conference, April 13, 2021, https://iui.acm.org/2021/hcai_tutorial.html (180min)

- This 3-hour tutorial proposes a new synthesis, in which Artificial Intelligence (AI) algorithms are combined with human-centered thinking to make Human-Centered AI (HCAI). This approach combines research on AI algorithms with user experience design methods to shape technologies that amplify, augment, empower, and enhance human performance. Researchers and developers for HCAI systems value meaningful human control, putting people first by serving human needs, values, and goals.

- BayCHI Meeting, June 8, 2021, Human-Centered AI: Reliable, Safe & Trustworthy (95min)

- HCAI framework, which shows how it is possible to have both high levels of human control and high levels of automation.

- Design metaphors emphasizing powerful supertools, active appliances, tele-operated devices, and information abundant displays.

- Governance structures to guide software engineering teams, safety culture lessons for managers, independent oversight to build trust, and government regulation to accelerate innovation.

- Adela Quinones, Global Head of News Automation and Analytics at Bloomberg: A Conversation with Ben Shneiderman about Human-Centered AI (CHI 2021 Conference), published October 5, 2021 (40min)

Tutorial

- April 13, 2021: ACM Conference on Intelligent User Interfaces

- May 27, 2021: University of Maryland HCIL Annual Symposium

The Three Papers

- HCAI Framework for Reliable, Safe, and Trustworthy Design: The traditional one-dimensional view of levels of autonomy suggests that more automation means less human control. The two-dimensional HCAI framework shows how creative designers can imagine highly automated systems that keep people in control. It separates human control from computer automation, allowing high levels of human control AND high levels of automation.

- Shift from emulating humans to empowering people: The two central goals of AI research, emulating human behavior (AI science) and developing useful applications (AI engineering), are both valuable, but designers go astray when the lessons of the first goal are put to work on the second goal. The emulation goal has often encouraged the belief that machines should be designed to be like people, when the application goal might be better served by providing comprehensible, predictable, and controllable designs. While there is an understandable attraction for some researchers and designers to make computers that are intelligent, autonomous, and human-like, those desires should be balanced by a recognition that many users want to be in control of technologies that support their abilities, raise their self-efficacy, respect their responsibility, and enable their creativity.

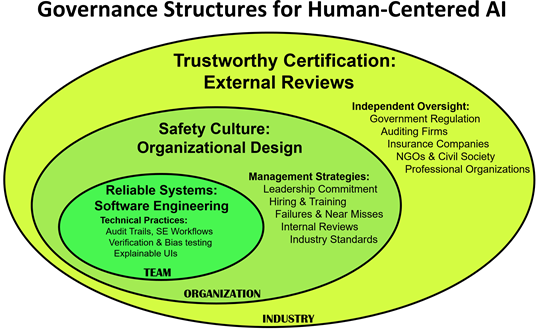

- Fifteen recommendations for AI governance: Successful HCAI systems will be accelerated when designers and developers learn how to bridge the gap between ethics and practice. The recommendations suggest how to adapt proven software engineering team practices, management strategies, and independent oversight methods. These new strategies guide software team leaders, business managers, and organization leaders in methods for developing HCAI products and services that are driven by three goals:

- Reliable systems based on proven software engineering practices,

- Safety culture through business management strategies, and

- Trustworthy certification by independent oversight.

Trustworthy certification by industry, though subject to government interventions and regulation, can be done in ways that increase innovation. Other methods to increase trustworthiness include independent audits by accounting firms, compensation for failures by insurance companies, design principles advanced by non-governmental and civil society organizations, and voluntary standards and prudent policies developed by professional organizations.

Related Material

- National Academy of Engineering Perspective (April 7, 2022): Ensuring Human Control over AI-Infused Systems

- Sparks of Innovation publishes controversial guidelines (September 9, 2021): Ten Old Beliefs and New Ideas: Steps toward Human-Centered AI

- Responsible AI: Bridging from Ethics to Practice, Communications of the ACM 64, 8 (August 2021), 32-35. Video summary.

- The National Academy of Sciences policy magazine ISSUES (Winter 2021) published Ben Shneiderman’s article on Human-Centered AI.

- Shneiderman, B., Human-Centered AI: A second Copernican Revolution, AIS Transactions on Human-Computer Interaction 12, 3 (September 2020).

- HCIL Workshop on Human-Centered AI: multiple videos (May 2020).

- Paper on Independent Oversight for Algorithms (PNAS, 2016): The dangers of faulty, biased, or malicious algorithms require independent oversight.

- List of courses and resources on HCAI